Bosses already live in fear that a verbal misstep will be recorded and go viral. Now they can look forward to a new nightmare in which artificial intelligence analyzes their rhetorical stumbles and suggests they’re no longer sharp enough to lead.

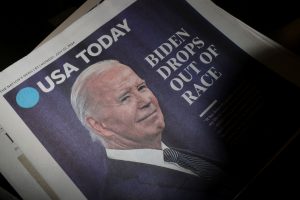

If that sounds like the premise of a new dystopian workplace drama, consider this: The same week a halting debate performance shattered public confidence in President Biden’s acuity, the journal Alzheimer’s & Dementia published a study showing AI can use a person’s speech patterns to forecast Alzheimer’s disease with roughly 80% accuracy, six years before the condition becomes diagnosable.

Lead researcher Yannis Paschalidis, a distinguished professor of engineering at Boston University, told me the current version of his machine-learning tool relies on a person’s responses to a set of questions in an oral exam administered by a medical professional. It can’t determine whether someone is on the road to dementia from, say, a recording of a CEO struggling through an all-hands meeting.

Not yet, that is. Paschalidis envisions a near future of people using AI-powered mobile apps to raise red flags on themselves or others. The risk of a false positive is outweighed by the potential harm of cognitive decline going undetected, he says.

“If you can develop tools to democratize screening, then more people would have access to better care,” he says. Neurologists—not AI—should make final diagnoses, he adds.

The advances still have the potential to unfairly cast doubt on people’s competence, even as they help some patients get a jump on treatment, says Dr. Bradford Dickerson, a neurologist at Massachusetts General Hospital.

“It’s going to be tricky to figure out what is useful to empower people to take better control of their own health versus what needs professional interpretation,” he says.

Suppose you lose your train of thought while battling jet lag or blank on a colleague’s name after pulling an all-nighter. AI could deem such gaffes signs of cognitive decline—accurately or not—and an employee who posts the boss’s AI assessment on social media could set off a wave of scrutiny.

“This is a topic that deserves attention and deserves to be put through its paces with regard to ethics,” Dickerson says.

That’s personal

Candidates often go through a battery of tests to show they’re fit for duty, and AI is bound to make the process feel even more invasive.

Research teams at West Virginia University and the University of California San Francisco are each testing AI tools that use cholesterol levels, bone density and other biomarkers found in blood to warn of Alzheimer’s disease years in advance. The tools, still being tested, aren’t yet used by doctors.

For people who might receive a troubling AI prognosis, “I don’t think the message is ‘Quit your job right now,’ ” says Alice Tang, lead author of the UC San Francisco study, published in February in the journal Nature Aging. “It’s more about setting expectations and planning for what your future might look like.”

Steven Blue, chief executive of railway equipment maker Miller Ingenuity, submitted to a psychological assessment before he was hired. His board of directors receives reports from his annual physicals, including blood work and EKG results.

Blue, 73, says he trusts that most corporate boards wouldn’t terminate a solid CEO based on an AI prediction of future dementia. Still, executives negotiating their next contracts would be wise to get limits on companies’ use of AI health models in writing, he adds.

“There’s a line somewhere between your fiduciary responsibilities and your personal privacy,” he says. “If the board said, ‘You could be a vegetable in five years, so we’re going to fire you or compel you to have this kind of treatment,’ I would just say, ‘I quit.’ ”

On the flip side

One thing could stop AI from sounding the alarm about your cognitive ability: more AI. Most business leaders don’t have the White House image managers Biden had to shield him from scrutiny in the months before he dropped his re-election bid. High-tech assistants, though, are the next best thing.

OpenAI’s ChatGPT and Google’s Gemini can instantly draft responses to questions submitted by employees during town-hall meetings. For example, I prompted ChatGPT to “write a CEO’s answer to this question from an employee: Why is the company laying people off after record profits last quarter?”

“I understand that recent news about the layoffs, despite our record profits last quarter, is both surprising and concerning,” began the suggested answer, generated in a split-second. “I want to address this head-on and provide clarity on our decision-making process.”

The rest of the response was a deft mix of empathetic lines about “supporting those affected” and business rationales like “market dynamics” and “strategic realignment.” For managers with diminished abilities to think on their feet, AI text generators are a useful crutch.

Then there are voice-cloning tools made by Descript and ElevenLabs that can be trained to mimic your delivery and fix your flubs. If you “um” and “uh” your way through a recorded PowerPoint presentation, you can feed the software a corrected script. The resulting audio sounds perfectly smooth and virtually indistinguishable from a human voice, according to the companies’ samples.

Glued to your notes while speaking? An additional Descript feature can edit the direction of your gaze and lend the impression of talking direct-to-camera in total command of the material.

Voice and video editors only work on recordings, but AI tools could make you appear sharper on the spot, too. Live speech generators developed for amyotrophic lateral sclerosis patients could be adapted for others, AI researchers say. Imagine an upgraded version of the Stephen Hawking voice helping a boss who’s lost a step sound as smart as the late, witty physicist.

Write to Callum Borchers at callum.borchers@wsj.com