Fake nude photos sparked an uproar at a New Jersey high school last year. Six months on, similar images, created with artificial-intelligence apps that merge a classmate’s face with a naked body, are rippling through schools of all kinds.

For the people depicted in those images, often young women, the pictures set in motion feelings of shame, fear and a loss of control. Their parents are often totally unaware.

High-school girls in Westfield, N.J., where the images were shared in group chats, said they were humiliated by the experience. Subjects of these AI-generated images also face judgment and bullying, according to interviews and surveys conducted by the Human Factor, a conflict-resolution strategy firm whose founders spent time with students.

“They would be looked at as dirty, despite their word that it was fake,” said one Las Vegas teen who participated in the research project. “No matter what, there would be people who don’t believe them.”

The researchers spent two days in Westfield, an affluent suburb, in February, interviewing students, parents and other community members. The firm then surveyed more than 1,000 students, educators and parents nationwide in March and April, and came up with guidance for parents and educators. (More on that below.)

When students were asked who would be more affected by fake nudes, the person who created them or the victim appearing in them, 73% said the victim.

“Even if it was debunked as a fake image, the subject would still get bullied and made fun of,” a student in Eldorado, Ill., said.

“Their morals would be questioned,” a student in Seale, Ala., said.

“People will see them differently,” a student in Levittown, N.Y., said.

Until recently, few students knew that photos of themselves on social media could be rendered nude by other kids using AI. Dozens of “face swapping” and “clothes removing” tools are now available online at little or no cost. The situation is adding to teens’ stress levels.

Images have impact

Images are often remembered better than spoken or written words, because of a phenomenon called the picture superiority effect. Even when photos are deleted or disappear in messaging apps, the researchers found, classmates aren’t likely to forget them. And the generated images are so realistic that it’s almost impossible to tell they’re fake.

Two-thirds of the students surveyed by Human Factor said it was likely they’d assume a photo was real if it looked real, especially if they thought the victim was the type of person who would share a nude photo. Only one-third said they would ask the person whether it was real.

The researchers also found that the only people who can effectively report these images to authorities are fellow students, because the photos are shared either in private group chats or on disappearing-message platforms such as Snapchat.

Parents rarely, if ever, see the images unless they are aggressively monitoring their kids’ chats.

Without an adult authority in the mix, the fake nudes can spread with few speed bumps. Students in the survey said they wouldn’t report these images to school authorities if they saw them in a group chat for fear of getting in trouble. Students also said they would only confront someone who created the images if they knew the person well.

Spreading AI fakes

Since I reported on the Westfield High School case in New Jersey in November, similar incidents have taken place at schools across the country.

Five students at a Beverly Hills, Calif., middle school were accused of creating AI-generated nudes of classmates and expelled, as was a student at a Washington state high school. Two teenage boys at a Miami middle school who allegedly created and shared fake nudes of classmates were arrested and charged with felonies. Several other schools have investigated reports of such images being shared.

In March, the Federal Bureau of Investigation issued a public advisory stating that it is illegal for anyone—even teens—to use AI to create child sexual-abuse material.

This might just be the beginning. Approximately 60% of the students the researchers surveyed said they believe their peers would use AI to generate fake nude images.

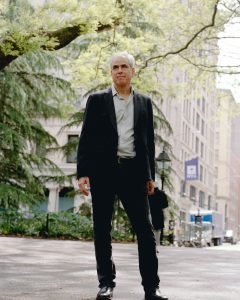

Kathleen Bond, a partner at Human Factor, grew up in Westfield and graduated from the high school in 2014. She and Rachel Moore Best, the firm’s founder, wanted to understand how students would react to such incidents, what would prompt them to report such images and what parents and educators could do to prevent similar incidents at other schools.

They funded the research themselves and plan to share their findings and recommendations with school districts, teacher organizations, the national PTA and youth groups.

What can be done

The researchers urge parents to talk to their kids about proper use of AI image-generating tools, using a conversation guide. They suggest schools do the following:

• Update student codes of conduct to include fake nudes, spelling out the consequences for creating and sharing AI-generated nude images.

• Encourage and enable students to report when they see one of these images.

• Perform role-playing exercises with students to explore hypothetical scenarios. The researchers designed these 45-minute exercises for schools to use.

• Run an awareness campaign in which students experiment with AI image generation, to highlight appropriate and inappropriate uses of generative AI.


Write to Julie Jargon at Julie.Jargon@wsj.com